I was fortunate enough after graduation to have an internship with General Dynamics Mission Systems on Kauai doing R&D, and even more so to be evaluating computer vision programs on the cutting edge of computing. Heterogeneous computing systems are becoming a vision for the future as Moore’s Law nears its end.

I worked closely with the Xilinx ZCU104 and PYNQ-Z1 evaluation boards and learned a lot about the new framework Xilinx is developing to bridge the gap between high- and low-level programming. Among its biggest ideas are reconfigurability and reusability, something that has yet to be seen in the world of FPGAs.

Some of the work I did can be found on my GitHub.

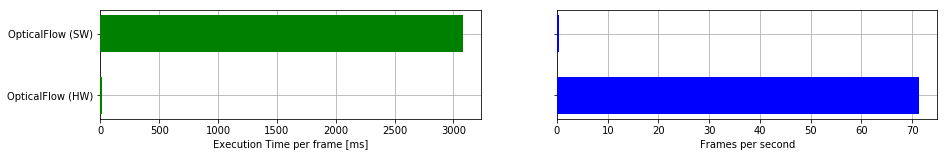

One of the most interesting examples of hardware acceleration was optical flow, which is commonly used as a tracking mechanism in computer vision. Running on the general-purpose ARM processor, it managed less than one frame per second. With the hardware overlay it reached 60 FPS or more, a speedup of at least 60x in throughput.
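For anyone who wants to reproduce this kind of comparison, here is a minimal sketch of how the frame rate and speedup could be measured. It is not the code I ran on the boards: `measure_fps` and `speedup` are hypothetical helper names, and `process_frame` stands in for whatever per-frame call is being timed (the ARM-only path or the overlay-backed one).

```python
import time


def measure_fps(process_frame, frames, clock=time.perf_counter):
    """Time `process_frame` over every item in `frames` and return frames per second.

    `process_frame` is a placeholder for the per-frame call under test.
    `clock` is injectable so the timing logic can be checked deterministically.
    """
    start = clock()
    count = 0
    for frame in frames:
        process_frame(frame)
        count += 1
    elapsed = clock() - start
    if count == 0 or elapsed <= 0:
        raise ValueError("need at least one frame and a positive elapsed time")
    return count / elapsed


def speedup(baseline_fps, accelerated_fps):
    """Return how many times faster the accelerated path is than the baseline."""
    if baseline_fps <= 0:
        raise ValueError("baseline_fps must be positive")
    return accelerated_fps / baseline_fps
```

Running `measure_fps` once per path over the same list of frames and passing both results to `speedup` gives the ratio. With a baseline just under 1 FPS and an accelerated rate around 60 FPS, that ratio comes out slightly above 60.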

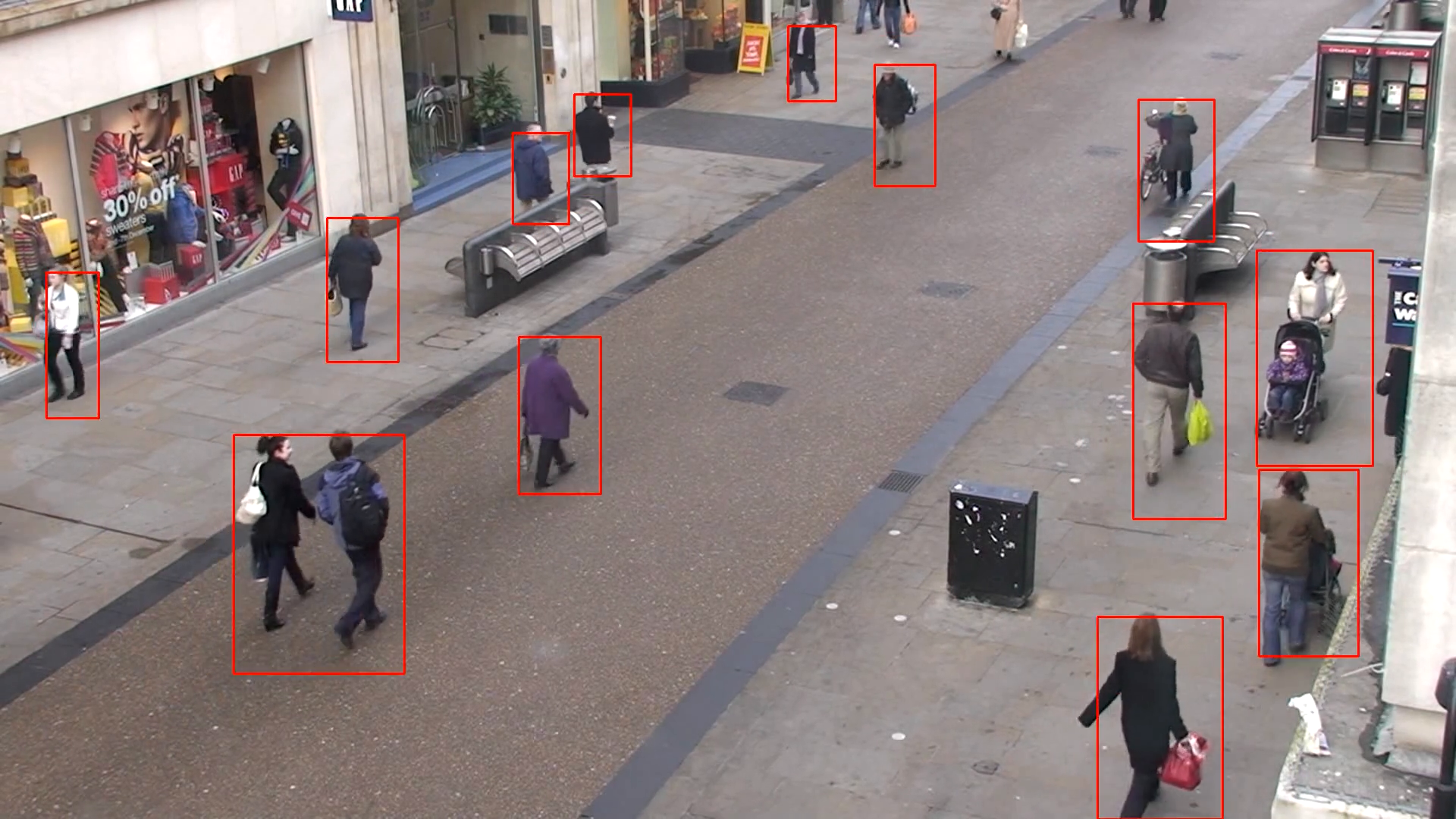

I created a demonstration to track moving pedestrians on a busy sidewalk. It would have run in real time, but there are still some issues Xilinx will need to work out:

- Loading multiple hardware-accelerated overlays (e.g. optical flow and 2D filtering simultaneously). The memory management class (xlnk) couldn’t be loaded twice.

- HDMI I/O for the ZCU104 when using the PYNQ-ComputerVision library.

Once those are fixed, everything should work great.